Running LLaMA, a ChatGPT-like large language model released by Meta, locally on an Android phone.

I use antimatter15/alpaca.cpp, which is forked from ggerganov/llama.cpp.

Alpaca is a fine-tuned version of LLaMA released by Stanford University. alpaca.cpp can run in CPU-only mode.

1. Environment

- Device: Xiaomi Pocophone F1

- Android 13

- SoC: Qualcomm Snapdragon 845

- RAM: 6GB

2. Compile alpaca.cpp

Please install Termux first. Then choose one of the methods below to compile alpaca.cpp on your device.

Alpaca requires at least 4GB of RAM to run. If your device has 8GB of RAM or more, you can run Alpaca directly in Termux or proot-distro (proot is slower). Devices with less than 8GB of RAM cannot reliably run Alpaca 7B this way, because Android always has processes running in the background that take up memory. Termux may crash immediately on these devices.
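To see which case applies to your device, you can read the total RAM from /proc/meminfo. The snippet below is only a minimal sketch: the function names are made up for illustration, and the 7 GiB cut-off is my own assumption (the kernel reports somewhat less than the advertised RAM size, so an "8GB" phone shows less than 8 GiB).

```shell
# Print total RAM in kB from a meminfo-style file (default: /proc/meminfo).
mem_total_kb() {
    awk '/^MemTotal:/ {print $2}' "${1:-/proc/meminfo}" 2>/dev/null
}

# Suggest a setup for a given amount of RAM in kB.
# The 7 GiB cut-off is an assumption standing in for "an 8GB device".
suggest_setup() {
    kb=${1:-0}
    if [ "$kb" -ge $((7 * 1024 * 1024)) ]; then
        echo "termux-or-proot"
    else
        echo "chroot-with-swap"
    fi
}

suggest_setup "$(mem_total_kb)"
```

Run it inside Termux before picking a method. On a 6GB device it should suggest the chroot setup with a swap file.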

Alternatively, you can root your phone, set up a chroot environment, and enable a swap file to extend the memory available on your device.

2.1. Compile alpaca.cpp in Termux

- Install these packages

pkg install clang wget git cmake

- Download Android NDK

wget https://github.com/lzhiyong/termux-ndk/releases/download/ndk-r23/android-ndk-r23c-aarch64.zip

unzip android-ndk-r23c-aarch64.zip

export NDK=~/android-ndk-r23c-aarch64
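Before moving on, it may be worth checking that `$NDK` really points at the unpacked NDK, since the cmake command later passes `$NDK/build/cmake/android.toolchain.cmake` as the toolchain file. A small sketch (the function name `ndk_toolchain_ok` is hypothetical, not part of the NDK):

```shell
# Return 0 if the given NDK directory contains the CMake toolchain file
# that is later passed to cmake via -DCMAKE_TOOLCHAIN_FILE.
ndk_toolchain_ok() {
    [ -f "$1/build/cmake/android.toolchain.cmake" ]
}

if ndk_toolchain_ok "${NDK:-}"; then
    echo "NDK toolchain file found"
else
    echo "toolchain file missing under '${NDK:-}'; check the unzip step" >&2
fi
```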

- Compile alpaca.cpp and download the model

git clone https://github.com/rupeshs/alpaca.cpp.git

cd alpaca.cpp

mkdir build-android

cd build-android

cmake -DCMAKE_TOOLCHAIN_FILE=$NDK/build/cmake/android.toolchain.cmake -DANDROID_ABI=arm64-v8a -DANDROID_PLATFORM=android-23 -DCMAKE_C_FLAGS=-march=armv8.4a+dotprod ..

make -j8

wget https://huggingface.co/Sosaka/Alpaca-native-4bit-ggml/resolve/main/ggml-alpaca-7b-q4.bin

- Run it

./chat
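The model file is roughly 4GB, so an interrupted download can leave a truncated file that fails to load. The sketch below checks the file before starting the chat. The function name is hypothetical and the 3 GiB lower bound is my own assumption, not an official figure.

```shell
# Return 0 if FILE exists and is at least MIN_BYTES long.
model_looks_complete() {
    file=$1
    min_bytes=$2
    [ -f "$file" ] || return 1
    # tr strips the padding some wc implementations add around the number
    size=$(wc -c < "$file" | tr -d ' ')
    [ "$size" -ge "$min_bytes" ]
}

# 3 GiB is an assumed lower bound for the 7B 4-bit model.
if model_looks_complete ggml-alpaca-7b-q4.bin $((3 * 1024 * 1024 * 1024)); then
    ./chat
else
    echo "model file missing or truncated; re-run the wget step" >&2
fi
```

If the check fails, `wget -c` with the same URL resumes a partial download instead of starting over.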

2.2. In Chroot

- Install Chroot Ubuntu and log in to Ubuntu.

- If your device has less than 8GB of RAM, it is recommended to create and enable a swap file.

dd if=/dev/zero of=/swapfile bs=1M count=8192 status=progress

chmod 0600 /swapfile

mkswap /swapfile

swapon /swapfile
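dd writes bs × count bytes, so bs=1M with count=8192 gives an 8 GiB swap file. If you want a different size, or want to confirm that the swap is really active, something like the following sketch may help. The helper names are made up for illustration; /proc/swaps lists active swap areas with their size in kB in the third column.

```shell
# dd count (number of 1 MiB blocks) for a swap file of the given size in GiB.
swap_count_for_gib() {
    echo $(( $1 * 1024 ))
}

# Sum the Size column (kB) of a /proc/swaps-style file, skipping the header.
active_swap_kb() {
    awk 'NR > 1 {sum += $3} END {print sum + 0}' "${1:-/proc/swaps}" 2>/dev/null
}

swap_count_for_gib 8    # prints 8192, the count used above
active_swap_kb          # total active swap in kB; 0 means swapon did not take effect
```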

- Install dependencies, compile the program, and download the model.

apt install build-essential wget git

git clone https://github.com/antimatter15/alpaca.cpp

cd alpaca.cpp

make chat

wget https://huggingface.co/Sosaka/Alpaca-native-4bit-ggml/resolve/main/ggml-alpaca-7b-q4.bin

- Run it.

./chat

2.3. In Proot

- Install Proot Debian and log in to Debian.

proot-distro login debian --shared-tmp

- Install dependencies, compile the program, and download the model.

apt install build-essential wget git

git clone https://github.com/antimatter15/alpaca.cpp

cd alpaca.cpp

make chat

wget https://huggingface.co/Sosaka/Alpaca-native-4bit-ggml/resolve/main/ggml-alpaca-7b-q4.bin

- Run it.

./chat

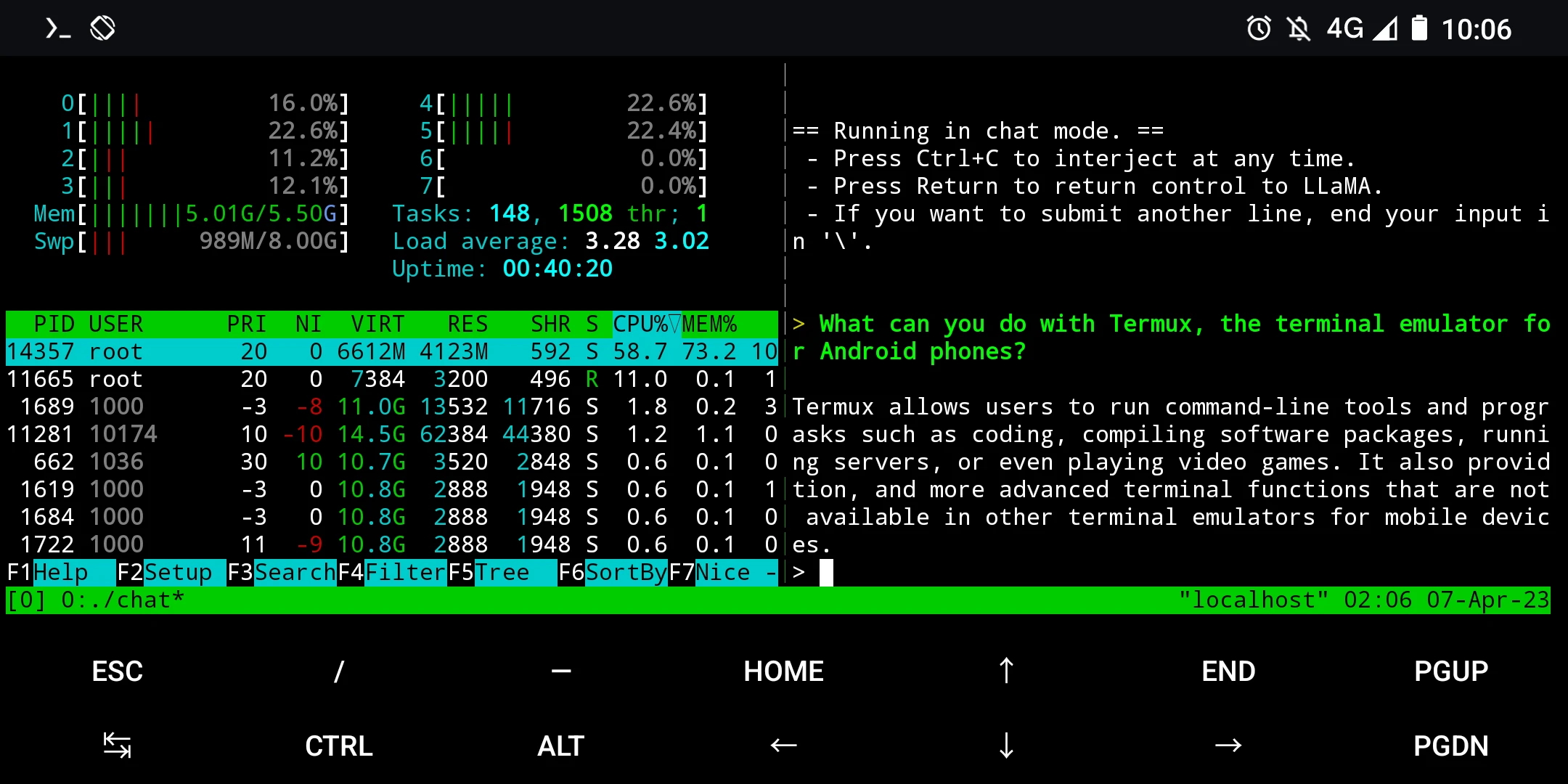

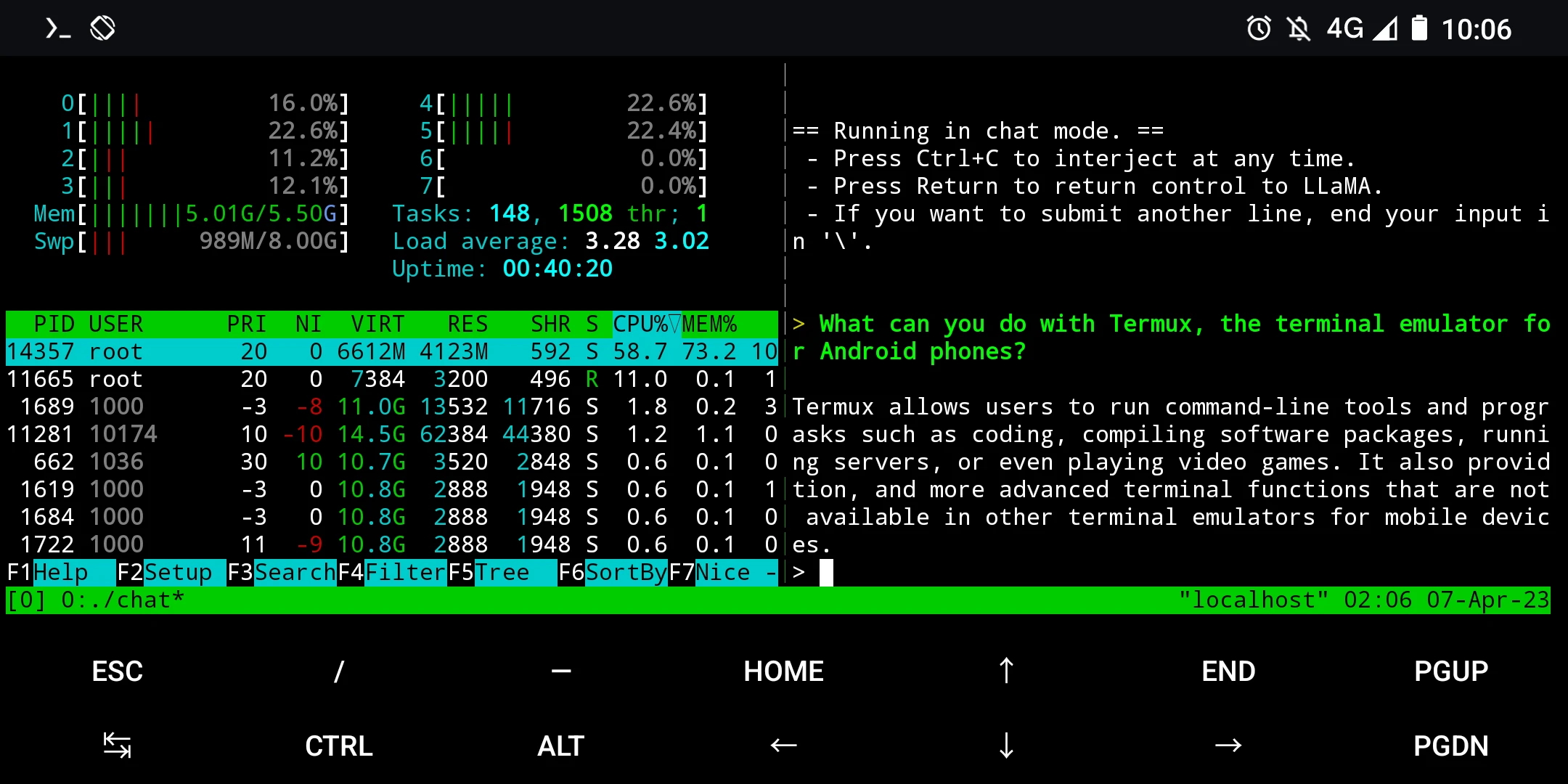

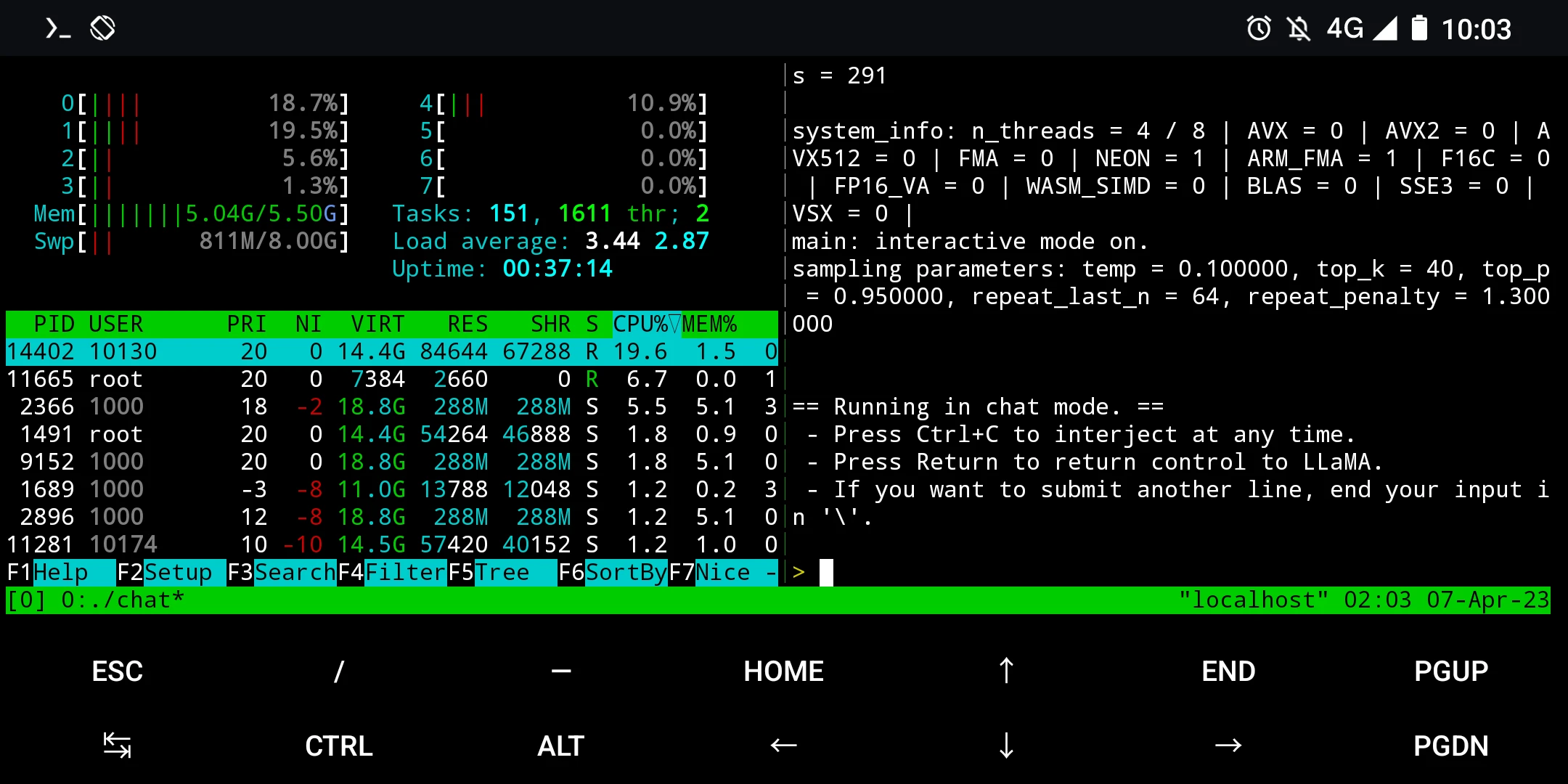

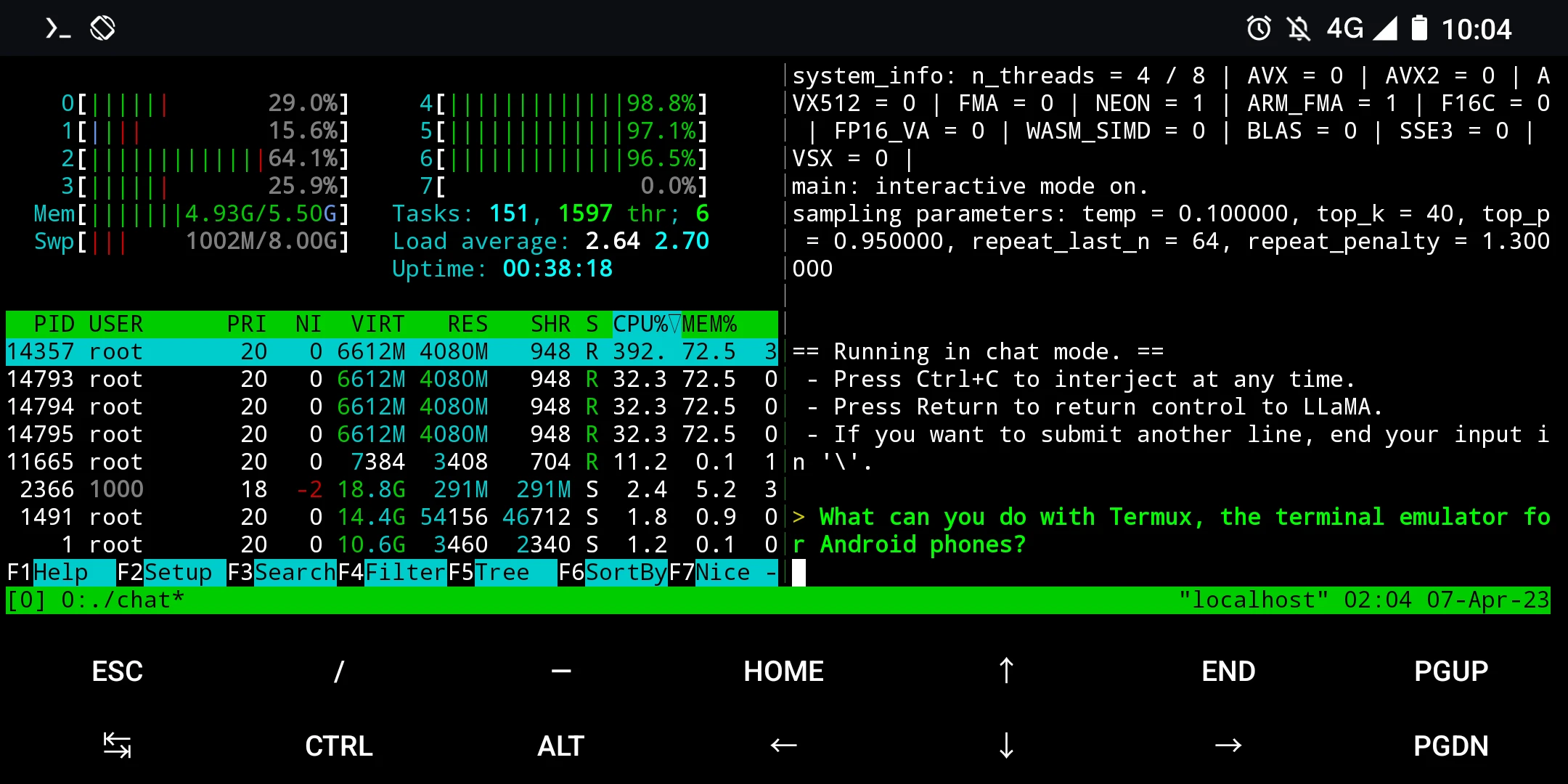

3. Usage

Now you can start chatting with Alpaca.

It takes Alpaca about 30 seconds to start answering each question.

What can you do with Termux? Well, the answer looks like it came from the official Termux website :)